Intro

It’s July 12th 2011 around 2:30pm EST as I start to write this. Anything interesting in the world of VMware virtualization go on today? :)

Riiight, VMware vSphere Version 5 has been announced! Along with many other products from VMware. Definitely go to VMware.com and EMC.com to see all the “awesomesauce” as one crazy Canadian puts it. <\shameless_plug>

As with everyone else’s blog, these words are my own and reflect my state of mind and not that of my employers. (RSA, the Security Division of EMC for those not keeping up)

iSCSI and vDS

I’m going to talk about vSphere Distributed Switches (vDS) and how I set up my lab to get rid of traditional vSwitches. In my current lab of two ESXi hosts and some Iomega storage, I strive to make it look as “customer like” as possible. I don’t do the typical vCenter install where you click, click, click through, let it install SQL Express and walk away. Nope, I do stuff like installing a full-blown SQL server and setting up vDS!

I wanted to separate my iSCSI traffic from my other VMkernel traffic and put them on separate switches. vDS seemed logical for my setup as I could more easily play around with jumbo frames and all sorts of other cool stuff that’s come out with V5 like NetFlow and port mirroring. Mind you, I’m probably not going to be dealing with that stuff on my iSCSI network but the option will be there.

Besides, vSwitches, as useful as they are, are kinda passé and I wanted to learn how to configure a vDS. So, I went searching around on the Interwebs and found a great article from Mike Graham at his blog “Mike’s Sysadmin Blog”. It showed how to set up iSCSI traffic to go over vDS. But it had a bunch of workarounds that required you to go into the command line on the ESX/ESXi server to “fix” things to work.

An additional article I highly recommend reading is from my friend Scott Lowe on Jumbo Frames and iSCSI+vDS. Scott’s article is based on vSphere V4 and like Mike Graham’s article on iSCSI and vDS, it lays out the required tweaking at the command line to make things work. In both cases they go into the requirements around modifying the VMkernel to start talking Jumbo Frames. According to my review of the vSphere V5 RC docs, I believe that’s no longer needed.

Well, in vSphere V5, you can do it all via the GUI! (and that means, of course, you can probably build a PowerCLI script that’ll do it!)

Step By Step

Start by creating a new vDS. We’ll call it “iSCSI dvSwitch”

Select the 5.0.0 version

Here I’ll set the number of dvUplink ports = 2. This means I’ll use 2 physical adapters PER HOST.

Now I’ll select which physical adapters I’ll use. In the example below, I’m using vmnic4 and vmnic5 on each of my Dell R610s.

So, now you’ll see the dvUplinks ready to roll.

Just to clarify:

- dvUplink1: Host1/vmnic4 and Host2/vmnic4

- dvUplink2: Host1/vmnic5 and Host2/vmnic5
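For those who’d rather script it, here’s a rough PowerCLI sketch of the same switch creation. It assumes a PowerCLI release that includes the distributed switch cmdlets and an existing Connect-VIServer session. The datacenter name and host names are made up for illustration, and parameter names on these cmdlets have shifted a little between releases, so check Get-Help before trusting it.

```powershell
# Sketch only: assumes Connect-VIServer has already been run against vCenter.
$datacenter = Get-Datacenter -Name 'Lab'              # hypothetical datacenter name

# Two uplink ports per host, matching the wizard settings above.
$vds = New-VDSwitch -Name 'iSCSI dvSwitch' -Location $datacenter -NumUplinkPorts 2 -Version '5.0.0'

foreach ($vmhost in Get-VMHost -Name 'host1.lab.local', 'host2.lab.local') {   # hypothetical host names
    # Join the host to the switch, then hand over vmnic4 and vmnic5 as its uplinks.
    Add-VDSwitchVMHost -VDSwitch $vds -VMHost $vmhost
    $nics = Get-VMHostNetworkAdapter -VMHost $vmhost -Physical -Name 'vmnic4', 'vmnic5'
    Add-VDSwitchPhysicalNetworkAdapter -DistributedSwitch $vds -VMHostPhysicalNic $nics -Confirm:$false
}
```

Make sure the NICs you hand over aren’t carrying your management traffic, or you’ll cut yourself off from the host.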

The wizard step pictured above will create a default port group. We’re going to need two port groups, and you’ll need to adjust the Teaming and Failover settings on each as follows.

From the docs:

If you are using iSCSI Multipathing, your VMkernel interface must be configured to have one active adapter and no standby adapters. See the vSphere Storage documentation.

Setting things up as outlined below will ensure your iSCSI adapter is set up using a compliant portgroup policy.

- Portgroup1: Active Uplink = dvUplink1, Unused Uplink = dvUplink2

- Portgroup2: Active Uplink = dvUplink2, Unused Uplink = dvUplink1
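The same teaming layout can probably be scripted. This is a minimal sketch, assuming the switch is named “iSCSI dvSwitch”, the uplinks kept their default dvUplink1/dvUplink2 names, and the two port groups don’t exist yet.

```powershell
# Sketch only: assumes an existing Connect-VIServer session.
$vds = Get-VDSwitch -Name 'iSCSI dvSwitch'

# One active uplink and one unused uplink per port group, mirrored between the two.
$layout = @{
    'Portgroup1' = @{ Active = 'dvUplink1'; Unused = 'dvUplink2' }
    'Portgroup2' = @{ Active = 'dvUplink2'; Unused = 'dvUplink1' }
}

foreach ($name in $layout.Keys) {
    $pg = New-VDPortgroup -VDSwitch $vds -Name $name
    Get-VDUplinkTeamingPolicy -VDPortgroup $pg |
        Set-VDUplinkTeamingPolicy -ActiveUplinkPort $layout[$name].Active -UnusedUplinkPort $layout[$name].Unused
}
```

Leaving the second uplink as unused (not standby) is what keeps the port group compliant for iSCSI port binding.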

When that’s done, the switch should look like this:

Now, in order to talk iSCSI to the iSCSI host, we need to bind a VMkernel adapter to each port group. On each host, go to Configuration…Networking…vSphere Distributed Switch and down to the iSCSI dvSwitch. Open “Manage Virtual Adapters…”

Create a new virtual adapter of type VMkernel (vmk1).

Assign it an IP address.

Map it to Portgroup1.

Repeat these steps for Portgroup2 with a second VMkernel adapter, then do the same on your other hosts.
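If you have more than a couple of hosts, clicking through this gets old. Here’s a hedged PowerCLI sketch of the same thing for one host. The host name and IP addresses are placeholders I made up, so substitute your own iSCSI subnet.

```powershell
# Sketch only: assumes an existing Connect-VIServer session.
$vds    = Get-VDSwitch -Name 'iSCSI dvSwitch'
$vmhost = Get-VMHost -Name 'host1.lab.local'          # hypothetical host name

# One VMkernel adapter per port group; the addresses are hypothetical.
New-VMHostNetworkAdapter -VMHost $vmhost -VirtualSwitch $vds -PortGroup 'Portgroup1' -IP '192.168.50.11' -SubnetMask '255.255.255.0'
New-VMHostNetworkAdapter -VMHost $vmhost -VirtualSwitch $vds -PortGroup 'Portgroup2' -IP '192.168.50.12' -SubnetMask '255.255.255.0'
```

The host picks the next free vmk number for each adapter, so check what you actually got with Get-VMHostNetworkAdapter -VMKernel before referring to vmk1 elsewhere.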

Configuring iSCSI

If this were vSphere V4, you’d be doing some command line stuff to get the rest of this to work. Not in vSphere V5!

First step, we need an iSCSI Storage Adapter. For your first host, open Configuration…Storage Adapters and click on Add…

Now click the OK button to add the iSCSI Adapter. You’ll see the following dialog box. Click OK.

OK, you now have your iSCSI adapter and it’s time to configure it. Click on Properties…

When the dialog box opens, click on the Network Configuration tab.

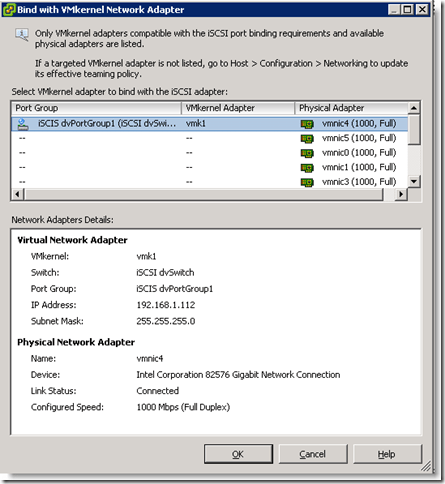

Click the Add… button

You should now see something like the following:

Click on OK and you get this screen. Here’s where you’ll see the port group policy compliance check I pointed out earlier!
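For the script-minded, the adapter creation and port binding above can be approximated from PowerCLI. This is a sketch under a few assumptions: the host name is a placeholder, vmk1 and vmk2 are assumed to be the two iSCSI VMkernel adapters, and the Get-EsxCli -V2 interface is only available in newer PowerCLI releases.

```powershell
# Sketch only: assumes an existing Connect-VIServer session.
$vmhost = Get-VMHost -Name 'host1.lab.local'          # hypothetical host name

# Enable the software iSCSI initiator, then look up the adapter it creates.
Get-VMHostStorage -VMHost $vmhost | Set-VMHostStorage -SoftwareIScsiEnabled $true
$hba = Get-VMHostHba -VMHost $vmhost -Type IScsi | Where-Object { $_.Model -match 'Software' }

# Bind each iSCSI VMkernel adapter to the software initiator (port binding).
$esxcli = Get-EsxCli -VMHost $vmhost -V2
foreach ($vmk in 'vmk1', 'vmk2') {                    # vmk2 is an assumption; use your own adapter names
    $esxcli.iscsi.networkportal.add.Invoke(@{ adapter = $hba.Device; nic = $vmk })
}
```

The bind should fail if the port group behind the VMkernel adapter has more than one active uplink, which is the same compliance check the GUI shows.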

Now it’s time to set up some iSCSI connections. Click on the Dynamic Discovery tab and then on Add… I’ll add two connections to my two Iomega IX4-200R devices that I’ve preconfigured iSCSI disks on.

After I’ve added my connections and clicked on Close, I’ll be prompted to rescan for devices. Click on Yes.

In my case, you’ll see the two iSCSI disks show up in the Details for the iSCSI Storage Adapter

Now let’s go over to Storage and see the new datastores. You MAY have to click on Refresh to get a clean view.
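The discovery and rescan steps can be sketched in PowerCLI too. The host name and target addresses below are placeholders, not my real Iomega addresses.

```powershell
# Sketch only: assumes an existing Connect-VIServer session and an enabled software iSCSI adapter.
$vmhost = Get-VMHost -Name 'host1.lab.local'          # hypothetical host name
$hba    = Get-VMHostHba -VMHost $vmhost -Type IScsi | Where-Object { $_.Model -match 'Software' }

# Add one dynamic discovery (Send Targets) entry per array; the addresses are hypothetical.
foreach ($address in '192.168.50.21', '192.168.50.22') {
    New-IScsiHbaTarget -IScsiHba $hba -Address $address -Type Send
}

# Rescan the adapters and VMFS volumes, then list what the host can see.
Get-VMHostStorage -VMHost $vmhost -RescanAllHba -RescanVmfs | Out-Null
$vmhost | Get-Datastore
```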

Jumbo Frames

A lot has been said about Jumbo Frames. Scott Lowe’s article touches on it for V4. Jason Boche has an awesome article on whether they actually help or not from a performance standpoint. I’ll leave it up to you to decide whether they’re worth it.

As a bit of a recap, there are two places in vSphere where Jumbo Frames need to be enabled.

- The VMkernel. Specifically, the NIC attached to the VMkernel being used for iSCSI traffic

- The vDS switch itself

Note that for Jumbo Frames to really work, you need to have everything from the vSphere level all the way thru the switches and the disk array supporting Jumbo Frames, otherwise it’ll either not work or performance will suffer.
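Once the MTU values covered in the next two sections are set, one way to check the whole path is a ping with the “don’t fragment” bit set. With a 9000-byte MTU, the largest ICMP payload that fits is 9000 minus 20 bytes of IP header and 8 bytes of ICMP header, or 8972 bytes. Here’s a sketch using the esxcli ping namespace from PowerCLI. As far as I know that namespace only exists on ESXi 5.1 and later, and the host name, target address and vmk1 are all assumptions.

```powershell
# Sketch only: assumes an existing Connect-VIServer session and ESXi 5.1 or later.
$vmhost = Get-VMHost -Name 'host1.lab.local'          # hypothetical host name
$esxcli = Get-EsxCli -VMHost $vmhost -V2

# 9000-byte MTU minus 20 bytes (IP header) minus 8 bytes (ICMP header).
$payload = 9000 - 20 - 8

# Ping the array from the iSCSI VMkernel adapter without allowing fragmentation.
$esxcli.network.diag.ping.Invoke(@{
    host      = '192.168.50.21'                       # hypothetical iSCSI target address
    interface = 'vmk1'
    size      = $payload
    df        = $true
    count     = 3
})
```

If the pings are lost at 8972 bytes but succeed at a small size such as 1472, something along the path is still at a 1500-byte MTU.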

VMkernel MTU Settings

Thankfully, in V5, this is all now settable via the GUI. Let’s start with the VMkernel. Go to Inventory…Hosts and Clusters…

Click on the host and then the Configuration tab. Select Networking… and then vSphere Distributed Switch.

Now, for the iSCSI vDS, click on Manage Virtual Adapters… You’ll see the VMkernel, click on that and then Edit.

Under the General tab, you’ll see the NIC Settings and the MTU value. Set that to 9000.

Previously, this was a funky set of command line steps, all called out in Scott’s article. MUCH simpler now.

UPDATE!

Want to change this from PowerCLI? Well, you CAN!

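```powershell
# $vmhostName holds the host's name; $host is a reserved automatic variable in PowerShell, so don't reuse it.
Get-VMHost -Name $vmhostName | Get-VMHostNetworkAdapter -VMKernel -Name vmk1 | Set-VMHostNetworkAdapter -Mtu 9000
```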

Virtual Distributed Switch MTU Settings

Now, let’s take a look at the vDS. Open the Inventory…Networking page. Select your vDS and click Edit Settings…

You’ll see the MTU value (Default 1500). Set that to 9000.
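The switch-level MTU should be scriptable as well. A minimal sketch, assuming the switch name used earlier in this post:

```powershell
# Sketch only: assumes an existing Connect-VIServer session.
Get-VDSwitch -Name 'iSCSI dvSwitch' | Set-VDSwitch -Mtu 9000

# Read the value back to confirm the change took.
Get-VDSwitch -Name 'iSCSI dvSwitch' | Select-Object Name, Mtu
```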

Wrap-up

Well, that’s it! You should now have iSCSI traffic moving across your vDS! Note: I HAVE tested iSCSI over vDS and it works just fine. I haven’t yet tested whether jumbo frames are truly working as advertised. There’s just too much going on at the moment. If you can, please post some feedback.

So, with V5 of vSphere, VMware has continued to raise the bar in easy setup and configuration. They’ve now dropped the requirement to step into the command line of ESX(i) and run obscure commands to get what seem like simple tasks done. You no longer have to bind VMkernel ports and set MTU values at the command line.

Please email me if I’m incorrect and I’ll be glad to fix this posting.

Thanks for reading!

mike